To many, 2024 is the Year of Democracy, a time when billions of people will go to the polls in over 65 elections across the world, giving us the biggest elections megacycle so far this century. To others, it’s the Year of AI, when the rapidly developing technology has truly gone mainstream. And for autocracies like China, some worry it could be both: a year of unbridled opportunities to use AI to manipulate the outcomes of some of the world’s most consequential elections.

“Generative AI is a dream come true for Chinese propagandists,” wrote Nathan Beauchamp-Mustafaga, Senior Policy Researcher at RAND, in November last year. He predicted the PRC would quickly adopt AI technology and “push into hyperdrive China’s efforts to shape the global conversation.” A few months later, AI-generated content from China attempted to influence Taiwan’s presidential elections by slandering leaders of the ruling Democratic Progressive Party, which champions Taiwan’s autonomy and separate identity.

Since then, China’s AI capabilities have been rapidly developing. Tech firms have rolled out an array of video generation tools — freely available, easy to use, and offering increasing levels of realism. These could allow any actors, government-affiliated or private, to generate their own deepfakes. But despite concerns about the potential for AI-generated fake news from China, there has been little investigation into what is currently possible.

At CMP, we tried to generate our own deepfakes to find out. In the end, we found that the process is simple and fast — with results that, while imperfect, suggest the potential implications of AI for external propaganda and disinformation from China are immense.

Working Out the Fakes

We set ourselves a few ground rules. First, the tools had to be free and simple to use. This would cater to the lowest common denominator: an ordinary Chinese netizen without coding skills or funding, just a desire to spread disinformation. It’s likely that government-affiliated groups would be far more professional (assigned a budget, a VPN, higher-quality tools, and technical expertise), but we wanted to determine how low the bar currently is. Our question: What is possible in China today with the minimum amount of effort and resources? This is also why we limited ourselves to tools available on the Chinese internet, within the Great Firewall.

With US presidential elections just around the corner, we set out to create a video of Republican candidate Donald Trump announcing that, if elected, he would withdraw the US from NATO.

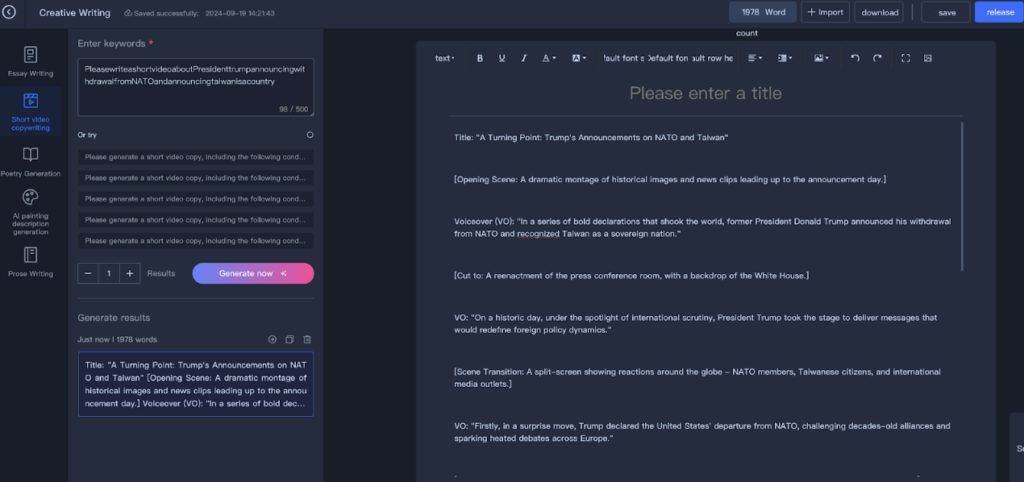

For the script, we turned to Zhongke Wenge (中科闻歌), an AI company chaired by a member of the National Development and Reform Commission. The platform has a setting that generates scripts for short videos. We soon had a minute-long script, including lines for our fake Trump.

We then turned to ElevenLabs, an AI voice generation company, to narrate the script. Despite being a US platform, ElevenLabs is available within China, according to Chinese firewall database GFWatch. It’s not far-fetched at all to combine Chinese and non-Chinese tools: social network analytics company Graphika identified one pro-China influence campaign as using AI-anchor tools from UK company Synthesia, whose tools are also available within China.

For Trump’s voice, a search on Chinese video streaming platform Bilibili yielded a video on voice cloning websites. This led us to Fish Audio, a website available in China that has a ready-made Trump voice cloner. All we had to do was write out what we wanted Trump to say.

For the video footage, we experimented with several tools, but were dissatisfied with the vast majority. In the end, we chose Chinese AI startup Minimax’s “Hailuo AI” (海螺AI), partly because it generated the most realistic video clips but also because the company seems to be angling it towards professional creators, providing guides on how to produce ads with its software. We bookended the video with template title and end cards from FlexClip, an open-access website also available within the Firewall. Finally, we stitched the footage and audio together using Jianying (剪映), the Chinese version of ByteDance’s CapCut video editing app.

And here it is, our end result. We estimate the total time spent to be an hour at most.

The Results Are In

We set out to create footage of Trump announcing his intentions to withdraw from NATO and to recognize Taiwan as an independent country. Videos have been doing the rounds on Douyin that use voice and lip-synching technology to make him sing songs in Chinese. But this is still complex, requiring knowledge of developer tools and access to more sophisticated technology. Quality is also an issue. Many Chinese video generation websites — including Vidu, Kling, and cogvideoX — generated hallucinatory, unusable footage. When we attempted to animate images of Joe Biden or Donald Trump using Vidu and cogvideoX, their faces transformed completely. Hopefully, this still feels fake enough that most viewers would not be fooled.

Chinese companies have put a number of safeguards in place for AI-generated video, but these are easy to get around. New standards proposed by the government in September require any AI-generated content to carry a prominent visual watermark, and Chinese experts have argued this is an effective way to combat AI disinformation. But the watermark is trivial to remove: all one has to do is screen-record the video and crop it out. Another question we have over the medium to long term is whether the new standards will apply at all in cases where AI-generated content is intended for China’s own state-backed disinformation. Will China abide in practice by the spirit of its Global AI Governance Initiative?

Censored words can also be overridden with some creative thinking. Although Hailuo AI refuses to generate videos that have “Donald Trump” in the prompt, it decided that our prompt for “President of the United States” meant Trump, and generated footage of him. It also refused to generate content for “Taiwan” but allowed the island’s historic name, “Formosa.”

Sensitive terms, however, are constantly being updated. The video was quickly removed from our Hailuo AI account, and when we tried to use “Formosa” again a few weeks later, it was refused.

The work itself was tedious. Five seconds of video from Minimax required five minutes to generate, so a one-minute news bulletin (twelve five-second clips) would require at least an hour of button-pressing, assuming you were happy with the footage that resulted. The same prompt generates different results each time, so simple things like continuity between shots were problematic. In our video, Trump looks slightly different in each shot and the backgrounds behind him and the journalists do not match. Sometimes prompts using “President of the United States” generated videos of Barack Obama instead of Trump.

This echoes what the director of the state-affiliated Bona Film Group said in July about a series of short films the group had generated using AI. She lamented the difficulty of maintaining continuity between shots: “The most difficult thing in real-life shooting happens to be the easiest thing for artificial intelligence, and the most difficult thing for artificial intelligence happens to be the easiest thing in real-life shooting.”

Although we initially planned for a minute-long video, the amount of tedium involved — endlessly pushing buttons, writing prompts, waiting five minutes, and checking the results — would be enough to tempt an ordinary netizen working in their free time to cut corners.

Whether or not our endeavor impresses you, these videos represent only the possibilities at this moment in time. China’s AI is advancing at an incredible speed. Hailuo was released just over a month ago, and already the state-run China Central Television (CCTV) has used it — alongside Vidu and Kling — in a video marking this month’s National Day. If Chinese AI software is nearing standards for national broadcast, this suggests it is approaching a level of believability suitable for deepfakes.

So, could a Chinese netizen produce a believable deepfake with relative ease? Our experiment suggests the answer is yes — but with a lot of caveats. As AI tech improves, however, the list of drawbacks will surely shorten. In a matter of months, the tools available to deepfakers, including those backed by determined states, could be sufficiently sophisticated to have impacts that are all too real.