It was a terrible answer to a naive question. On August 21, a netizen reported the provocative response their daughter received when she asked a children’s smartwatch whether Chinese people are the smartest in the world.

The high-tech response began with old-fashioned physiognomy and then turned dismissive. “Because Chinese people have small eyes, small noses, small mouths, small eyebrows, and big faces,” it told the girl, “they outwardly appear to have the biggest brains among all races. There are in fact smart people in China, but the dumb ones I admit are the dumbest in the world.” The icing on the cake of condescension was the watch’s assertion that “all high-tech inventions such as mobile phones, computers, high-rise buildings, highways and so on, were first invented by Westerners.”

Naturally, this did not go down well on the Chinese internet. Some netizens accused the company behind the bot, Qihoo 360, of insulting the Chinese people. The incident offers a stark illustration not just of the real difficulties China’s tech companies face as they build their own Large Language Models (LLMs), the foundation of generative AI, but also of the deep political chasms that can sometimes open at their feet.

Qihoo Do You Think You Are?

In a statement on the issue, Qihoo 360 CEO Zhou Hongyi (周鸿祎) said the watch was not equipped with the company’s most up-to-date AI. Instead, it ran on technology dating back more than two years, to May 2022, before the likes of ChatGPT entered the market. “It answers questions not through artificial intelligence,” he said, “but by crawling information from public websites on the Internet.”

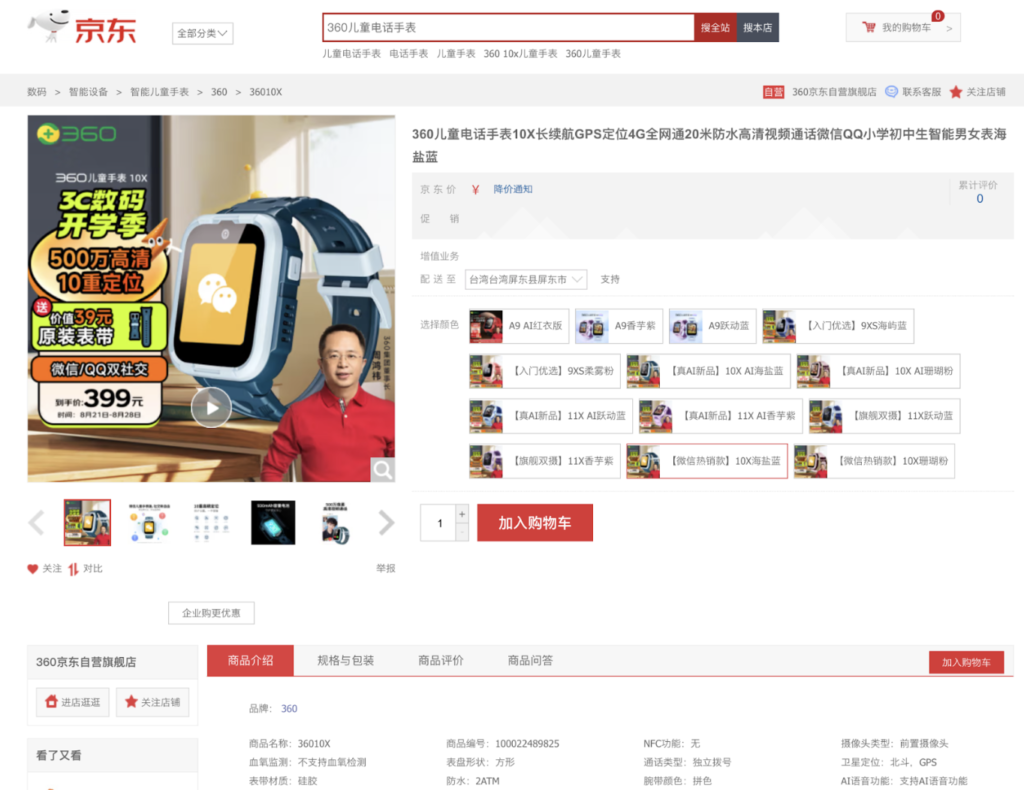

The marketing team at Qihoo 360, one of the biggest tech companies investing in Chinese AI, seems to see things differently. The watch has indeed been on sale since at least June 2022, meaning its technology can already be considered ancient in the fast-moving field of AI. But the company has been selling it on JD.com as having an “AI voice support function.” We should also note that Qihoo 360 has a history of denials about software on its children’s watches. So should we be taking Qihoo 360 at its word?

Zhou added, however, that even the latest AI could not avoid such missteps and offenses. He said that, at present, “there is a universally recognized problem with artificial intelligence, which is that it will produce hallucinations — that is, it will sometimes talk nonsense.”

Model Mirage

“Hallucinations” occur when an LLM stitches together different pieces of data to produce an answer that sounds plausible but is incorrect at best, and offensive or illegal at worst. This would not be the first time that the LLM of a big Chinese tech company said the wrong thing. Ten months ago, the “Spark” (星火) LLM created by Chinese firm iFLYTEK, another industry champion, had to go back to the drawing board after it was accused of disparaging Mao Zedong. The company’s share price plunged 10 percent.

This time, many netizens on Weibo expressed surprise that posts about the watch drew barely four million views and never became a hot search topic, even though perceived insults against China usually trend far more strongly.

For nearly any LLM today, the hallucinations Zhou Hongyi referred to are impossible to control completely. For those who want to trip a model up to create humorous or embarrassing results, or even to override its safety mechanisms (a practice known in the West as “jailbreaking”), this remains relatively easy to do. That presents a huge challenge for Chinese tech companies in particular, which are strictly regulated to ensure political compliance and curb incorrect information, even as they race to build and develop LLMs in what has been dubbed the “Hundred Model War.”

As China’s engineers know only too well, it is not possible to plug all the holes. Reporting on the Qihoo story, the Beijing News (新京报) said hallucinations go with the territory when it comes to LLMs, quoting one anonymous expert as saying that it was “difficult to do exhaustive prevention and control.” Interviewees told the Beijing News that steps can be taken to minimize untrue or illegal language generated by hallucinations, but that eliminating the problem altogether is impossible. In a telling sign of the risks inherent in acknowledging these limitations, none of these sources wanted to be named.

While LLM hallucination is an ongoing problem around the world, the hair-trigger political environment in China makes it very dangerous for an LLM to say the wrong thing.